Spatial AI Agents, Monitoring Cameras, Exoskeleton Wearables, Robotic Cats and Dogs, and Humanoid Robots… Guided by the theme “Enchant Everything with AI,” Maker Faire Shenzhen 2024 gathered a host of AI hardware innovators to explore the rich possibilities of integrating AI with industry.

AI technology is developing rapidly. Large language models (LLMs), tinyML (tiny machine learning) and related technologies are reshaping human society. From smart homes to autonomous driving, and from healthcare to industrial manufacturing, AI is changing the way we live and driving industrial innovation and development.

Maker Faire Shenzhen 2024, a global gathering of makers that ended last week, showed the appeal of its theme, “Enchant Everything with AI.” Over two days of exhibitions and forums in the main exhibition area at Vanke Design Commune, foot traffic exceeded 350,000.

Eric Pan, founder of Seeed Studio and Chaihuo Makerspace, spoke frankly about the relationship between makers and AI hardware at the “Night Talk on AI Hardware” event held on the eve of Maker Faire Shenzhen 2024:

“‘Enchant Everything with AI’ is more of a vision. In the real maker community, fewer than 0.5% of makers use AI in their products. The share of AI in the Shenzhen maker community is already comparatively high, but in the Bay Area and other parts of the world, we see makers still working with the same Arduino boards they used ten years ago. That’s why our slogan this year is ‘Enchant Everything with AI’: we want to give people the possibility to do that.”

Under this theme, Maker Faire Shenzhen brought together a group of AI hardware makers to explore the many ways AI can be integrated with industry.

In an era when AI touches everything, Maker Faire Shenzhen will work with makers everywhere to build a better future.

Below are 11 AI hardware projects exhibited at Maker Faire Shenzhen 2024. Many of them are still at an early stage, but as several makers said at the “Night Talk on AI Hardware” event, what matters most is keeping the passion for creation and a youthful heart.

01 SenseCAP Watcher

AI Agents for Smart Spaces

SenseCAP Watcher, developed by Seeed Studio and first unveiled at Embedded World in April 2024, is billed as the world’s first AI IoT device for monitoring physical spaces.

As a spatial management AI agent, it combines AI with IoT: locally run tinyML models work together with large cloud-based models to understand and respond to user commands. An integrated camera, microphone, voice-interaction speaker and multiple sensors let it perceive its environment and give accurate feedback during interactions.

Main hardware configuration: 1.46-inch circular touchscreen, OV5647 camera module, and a Himax HX6538 chip with Arm Cortex-M55 and Ethos-U55 cores.

02 Petoi OpenCat

Programmable Robotic Dogs and Cats

OpenCat is an open-source quadruped robotic pet framework developed by Petoi and based on the Arduino and Raspberry Pi platforms. Robotic dogs and cats built on it can perform complex movements such as walking, running, jumping and backflips.

You can add sensors and cameras to enhance its perception, or give it AI capabilities by installing a Raspberry Pi or another AI board such as the NVIDIA Jetson Nano.

You can also use the OpenCat platform to develop a wide range of robotics, AI and IoT applications, including robotic dogs with autonomous mobility and object detection, ROS-based vision and LiDAR SLAM, and imitation learning with tinyML models.

OpenCat has been used in K-12 schools and universities to teach students STEM, programming and robotics.

03 Motion-assisted robot π

A lightweight exoskeleton for lower limb assistance

π is a lightweight lower-limb exoskeleton that integrates core technologies in ergonomics, power, electronics and AI algorithms. It senses the direction of lower-limb movement and delivers assistance at the right moment, effectively reducing the load on the legs, protecting the wearer during exercise and making cardiorespiratory exercise more balanced.

The device has a stylish look and weighs only 1.8 kg, yet delivers up to 13 Nm of assistance. An adjustable waist fits a wide range of body types, and it is easy to put on and take off. A modular, foldable structure makes it compact and easy to store, the battery is replaceable, and it is suited to outdoor use. An AI learning algorithm automatically adapts to the wearer’s walking habits, so it can be used in many areas of daily life and work.

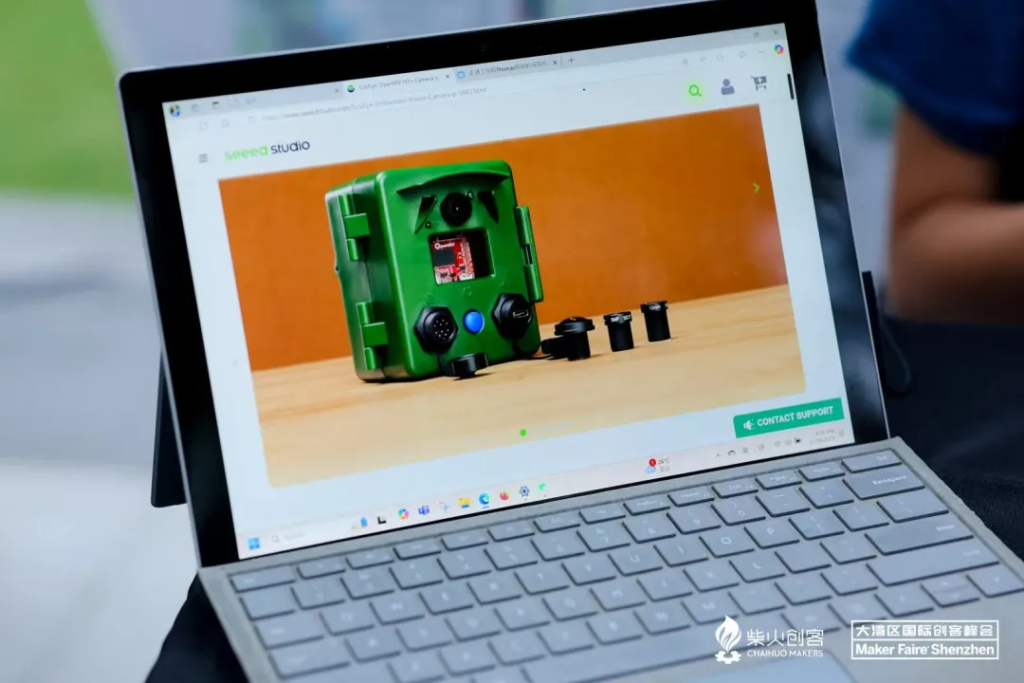

04 ecoEye V10

An AI monitoring camera

Developed by the Provincial Key Laboratory of Coastal Zone Environment and Resource Research (SASE Lab) at Westlake University, the ecoEye V10 camera is a portable camera with machine vision capabilities designed for remote deployment.

Based on the OpenMV Cam H7 Plus, it is easy to set up and suits a wide range of applications. A built-in intelligent power management module supports long-term operation, and the camera can be combined with solar panels, a variety of sensors and other external devices in a portable, waterproof housing for use in harsh environments.

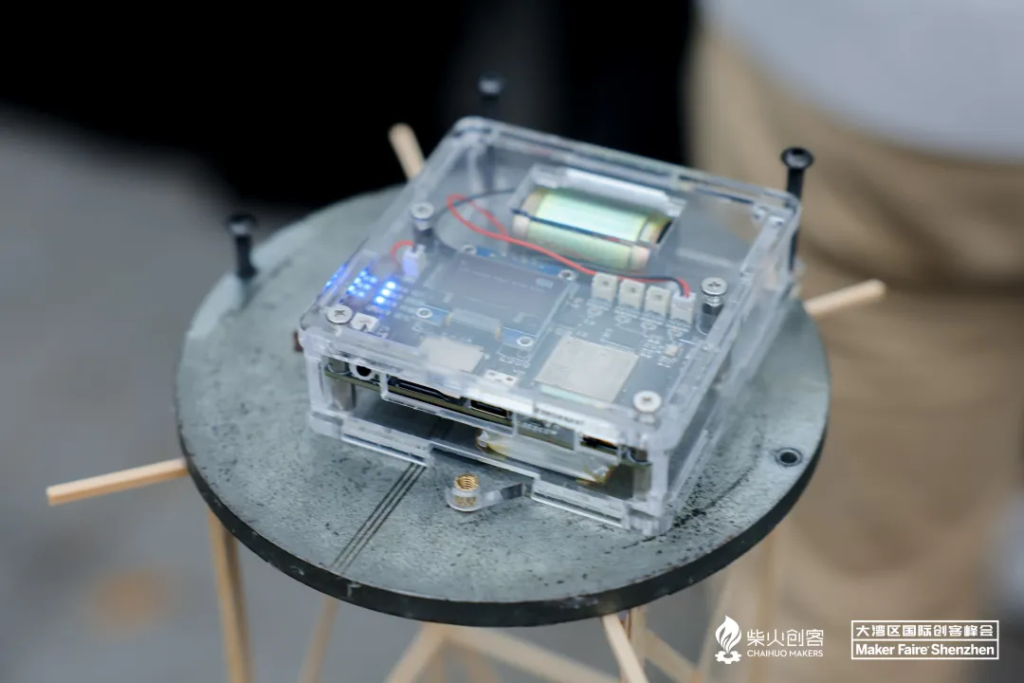

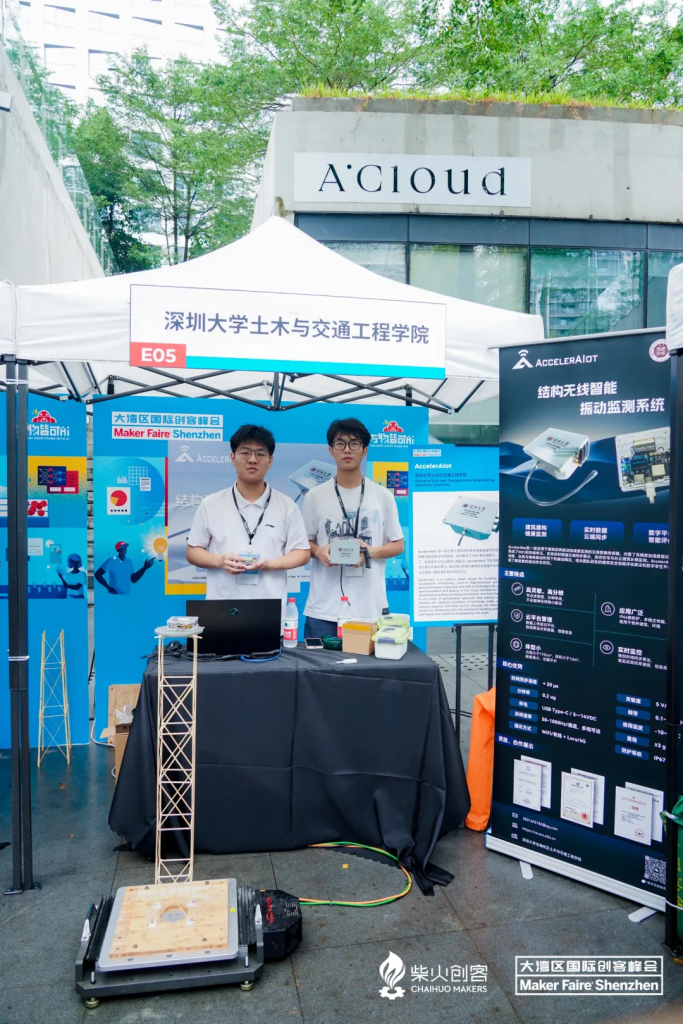

05 AccelerAIot

A Building Vibration Monitoring Terminal

Developed by the School of Civil and Transportation Engineering at Shenzhen University, AccelerAIot is a wireless smart sensor for monitoring vibration and acceleration in building structures. It has a built-in high-precision acceleration and vibration sensing module plus integrated Wi-Fi and storage, supports real-time data synchronization and display in the cloud, and is suited to long-term monitoring of residential and office buildings.

AccelerAIot can monitor building vibration caused by earthquakes, typhoons and subway trains. Combined with the intelligent building safety assessment algorithm and digital twin platform developed by the team, it helps owners visualize the vibration safety status of their buildings.

06 GPT One-Shot Ask Big Button

A new take on desktop search

With large models from China and abroad now everywhere, it’s time to update our search-engine habits. ABI DAO designed a big button that sits next to your desktop computer: whenever you have a question, just press it and ask, and a large model answers with what it knows. It’s free to use.

Maker Liang (Liang Hong’en) has witnessed China’s maker movement firsthand. He was a representative on the first Maker West Tour and is a longtime member of SZDIY Hackerspace. Since 2014, Liang has taken part in and promoted maker activities in Shenzhen, experiencing the whole arc of the maker movement: from underground to mainstream, and from the clouds back down to earth.

At the “Night Talk on AI Hardware” event on the eve of Maker Faire, Liang revealed that the device took him only a week to build.

07 XGO Robot Dog and Wheel-Legged Robot

Designed for student desks

Founded in November 2020, Luwu Intelligence focuses on desktop quadruped and wheel-legged robotics technology and products. Its core team members come from the State Key Laboratory of Robotics Technology and Systems at Harbin Institute of Technology, and its XGO series is popular with robotics developers worldwide. The company brought two projects to Maker Faire: the XGO robot dog and the XGO-Rider wheel-legged robot.

XGO2 is a 12-degree-of-freedom desktop AI robot dog with a three-degree-of-freedom robotic arm and gripper on its back and a built-in Raspberry Pi CM4 compute module for AI edge computing applications. It supports omnidirectional movement, six-dimensional attitude control, attitude stabilization and various gaits. A 9-axis IMU, joint position sensors and current sensors report its attitude, joint angles and torque, which can be used by onboard algorithms and for secondary development. The robot dog can be programmed graphically, in Python or with ROS from a phone or computer to develop AI applications.

XGO-Rider is a desktop two-wheel-legged platform based on Raspberry Pi, with a built-in Raspberry Pi CM4 module for AI edge computing applications. It uses 4.5 kg·cm all-metal serial bus servos with magnetic encoders as joints and FOC hub motors as wheels, enabling omnidirectional movement, attitude stabilization, combined motions, and image and voice interaction. Its built-in IMU supports onboard algorithms and secondary development, and it can be programmed in Python and with ROS.

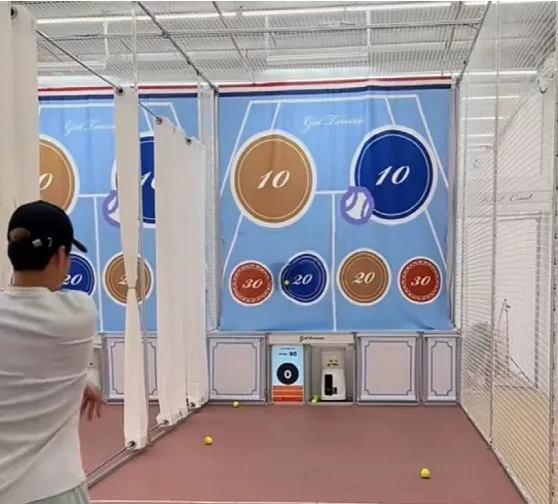

08 GetTennis

AI-enabled skill enhancement

GetTennis uses AI, smart hardware and related technologies to help tennis players improve their skills. Score circles worth 10, 20 and 30 points are printed on a target cloth, and the system records a score for each swing based on where the ball lands, giving players a concrete measure of their progress.

GetTennis also offers a complete solution for building self-service tennis courts. Its 24-hour smart tennis court solution combines visual recognition with smart hardware, so players can see real-time feedback on their strokes along with scores and rankings, which makes playing more fun.

09 XPOLAR

A wearable sports recovery equipment

XPOLAR X2 Hot and Cold Compression Sports Recovery Device is designed and developed by Shenzhen Extreme Motion Intelligent Innovation Co., Ltd.

Using compression, cold, heat and alternating hot-and-cold therapies, it helps the body recover after exercise and is especially suited to recovery of areas such as the thighs, calves and knee joints. Weighing only 1.2 kg, it is particularly convenient for athletes who travel.

Combining leading medical principles with advanced engineering, it provides recovery programs for moderate- to high-intensity trainers and sports enthusiasts, giving them access to the post-exercise recovery methods used by professional athletes, such as low-temperature recovery (ice packs), alternating hot-and-cold relaxation therapy, pressure-cycling relaxation and lactic acid clearance techniques.

It also connects to smartphones, where an AI personal physical therapist generates customized recovery plans based on exercise type, fatigue level and body part.

10 Cyberpunk AI Dialog Bot

Retro and futuristic

Li Changliang is a veteran maker with more than a decade of experience designing and developing entertainment and consumer electronics products. He has designed several open-source handheld game consoles and is a tech blogger with 600,000 followers across platforms. After graduating from the Institute of Automation of the Chinese Academy of Sciences, he focused on AI and natural language processing, and served as director of the Kingsoft Research Institute, responsible for the company’s AI technology planning and research implementation.

At this year’s Maker Faire, Li Changliang exhibited a cute AI dialog robot. It uses a vintage CRT picture tube as its mouth, a look often seen in last-century sci-fi movies, and has mechanical eyes that recognize faces and keep staring at you.

You can talk to it and ask it to play videos, music and even games on the tube, though the final effect may vary.

11 WiseEye X+1

A Smart Terminal for the Blind

WiseEye X+1 is an intelligent assistive terminal, designed by a team of students from Shenzhen Institute of Technology, that helps visually impaired people live better lives. Built around AI, its core functions include object recognition, text reading and full-scene description. By applying open-source visual question answering (VQA) models, it converts scenes into spoken descriptions in real time, giving visually impaired people quick access to information.

The team shared the story behind their creation: “After many rounds of extended testing and user feedback, we deployed the model on a portable edge computing unit so that video is processed locally, effectively meeting users’ pressing need for privacy and security. Over nearly four months of rapid iteration and continuous optimization, the product has earned recognition and high expectations from a growing number of visually impaired friends. We will keep working to improve the quality of life of visually impaired people and to provide better assistive technology!”